Our goal is to develop an open library of cases that serves as a reference for anyone who wants to apply AI in a functional area of an organization. Important: This is not legal advice. We take no responsibility for the correctness of the information provided, so please use it as a reference only!

What data can I find here?

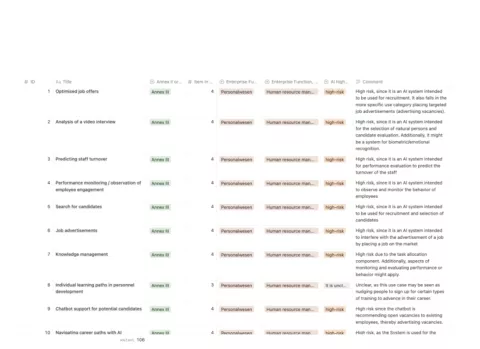

This table contains a total of 106 AI Systems (a.k.a. Use Cases) in the enterprise, each with a Risk Classification according to the European AI Act, with reference to the proposals of

- the EU Commission (April 2021)

- the EU Parliament amendments (early/mid 2022)

- the EU Council (December 2022)

Note: The English text was generated using Google Translate.

For each AI System, the table contains:

- ID

- Title (EN only)

- Description (EN & DE)

- Enterprise Function (e.g. Marketing, Production, HR, Legal, … )

- Risk Class (High-Risk, Low-Risk, Prohibited)

- Classification Details

- Applicable Annex in the AI Act (II or III)

- For Annex III: The applicable sub-item (1-8)

- If high-risk or unclear classification: Comment with a rationale
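To make the column list above concrete, here is a minimal Python sketch of how one row of the table could be modeled. The class name `AISystemRecord`, all field names, the string type of the ID, and the example values are assumptions made for illustration; they are not the actual column headers or contents of the table.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class RiskClass(Enum):
    """The three risk classes listed above."""
    HIGH_RISK = "High-Risk"
    LOW_RISK = "Low-Risk"
    PROHIBITED = "Prohibited"


@dataclass
class AISystemRecord:
    """One row of the table. Field names are hypothetical."""
    id: str                               # ID (type assumed to be text)
    title_en: str                         # Title (EN only)
    description_en: str                   # Description (EN)
    description_de: str                   # Description (DE)
    enterprise_function: str              # e.g. "Marketing", "HR", "Legal"
    risk_class: RiskClass
    classification_details: str = ""
    annex: Optional[str] = None           # "II" or "III", if applicable
    annex_iii_item: Optional[int] = None  # sub-item 1-8, only for Annex III
    comment: Optional[str] = None         # rationale, if high-risk or unclear

    def __post_init__(self) -> None:
        # Only Annex II and Annex III are listed as applicable annexes.
        if self.annex not in (None, "II", "III"):
            raise ValueError("annex must be 'II', 'III', or None")
        # A sub-item only makes sense for Annex III and must be 1-8.
        if self.annex_iii_item is not None:
            if self.annex != "III":
                raise ValueError("annex_iii_item requires annex == 'III'")
            if not 1 <= self.annex_iii_item <= 8:
                raise ValueError("annex_iii_item must be between 1 and 8")


# A made-up record for illustration only; it is not taken from the table.
example = AISystemRecord(
    id="X-001",
    title_en="Example use case",
    description_en="Example description",
    description_de="Beispielbeschreibung",
    enterprise_function="HR",
    risk_class=RiskClass.HIGH_RISK,
    annex="III",
    annex_iii_item=4,
    comment="Example rationale",
)
```

The validation in `__post_init__` only encodes the constraints stated in the list (Annex II or III, sub-items 1 to 8); it does not attempt to check whether a classification is legally correct.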